Validating Mobile Diet Tracking Apps: A Research-Grade Assessment for Biomedical Applications

Abstract

This article provides a critical evaluation of mobile diet tracking applications against traditional research-grade methods like weighed food records and 24-hour recalls. Aimed at researchers, scientists, and drug development professionals, it explores the foundational principles of dietary assessment, examines the integration of artificial intelligence for enhanced accuracy, identifies key methodological challenges and biases, and presents a framework for the validation and comparative analysis of these digital tools in clinical and epidemiological research settings.

The New Frontier in Dietary Assessment: From Memory-Based Methods to AI-Driven Tracking

In epidemiological and clinical research, the accurate assessment of food intake and usual dietary consumption represents a fundamental requirement for understanding diet-disease relationships and confounding factors in intervention studies [1]. Dietary parameters serve as key determinants when investigating chronic conditions such as obesity, type 2 diabetes, cardiovascular diseases, and cancer [1]. Furthermore, in drug development studies, assessing background diet is crucial for identifying food-drug interactions, where chemical compounds in foods can potentially affect pharmacokinetic, pharmacodynamic, or metabolic pathways of pharmaceutical agents [1].

The established methods for collecting dietary data—including food records, food frequency questionnaires (FFQs), 24-hour dietary recalls, and diet history—have supported nutritional epidemiology for decades. However, these conventional approaches harbor significant limitations that can compromise data quality and subsequent research conclusions. This article examines these limitations through the lenses of recall bias, researcher bias, and measurement errors, while validating emerging mobile diet-tracking applications against research-grade methods.

Limitations of Traditional Dietary Assessment Methods

Recall Bias: The Fallibility of Memory

Food Frequency Questionnaires and 24-hour recalls inherently depend on participant memory, leading to systematic errors in reporting. Participants frequently struggle to accurately recall types and quantities of foods consumed, especially over extended periods. This problem is exacerbated for complex dishes, infrequently eaten items, or during unstructured eating occasions. The direction of this bias is often non-random; individuals with obesity may underreport energy intake more frequently than those with normal weight, potentially distorting observed diet-disease relationships in epidemiological studies [1].

Researcher Bias: Subjectivity in Data Collection and Coding

Traditional methods introduce multiple opportunities for researcher influence throughout data collection and processing. During 24-hour dietary recalls, interviewers may unconsciously prompt participants in ways that steer responses toward socially desirable answers. In the subsequent coding phase, researchers must interpret dietary descriptions and match them to appropriate food composition database items, a process requiring subjective judgment that can vary significantly between analysts [1]. This coding variability introduces measurement error that is often difficult to quantify.

Measurement Errors: The Burden of Implementation

All traditional dietary assessment tools impose significant participant burden, which can affect data quality. Detailed food records demand substantial time commitment and literacy skills, and the act of recording can alter normal eating patterns, a phenomenon known as reactivity [1]. Furthermore, dietary intake and biological parameters are often temporally misaligned in research settings; for instance, gut microbiota composition exhibits daily variation related to food choices, but traditional methods rarely capture this dynamism effectively [1].

Mobile Diet-Tracking Apps: A Comparative Validation Framework

Classification of Mobile Dietary Assessment Tools

Mobile diet-tracking applications fall into two primary categories with distinct characteristics and intended uses:

- Academic Apps: Developed by nutrition and dietetics experts specifically for research purposes, these tools prioritize scientific validation and methodological rigor. Examples include Electronic Dietary Intake Assessment (e-DIA), DietCam, and My Meal Mate [1]. Their strengths include scientific oversight, validated methodologies, and enhanced privacy protections, though they often lack the polished user experience of commercial alternatives [1].

- Consumer-Grade Apps: Developed primarily by private entities for public use, these applications focus on usability and widespread adoption. Popular examples include MyFitnessPal, FatSecret, and Lose It! [1]. While offering extensive food databases and user-friendly features, they may prioritize commercial objectives over scientific accuracy [1].

Experimental Protocols for App Validation

Research validating mobile diet-tracking apps against reference methods typically follows structured experimental protocols:

- Food Item Selection: Studies identify frequently consumed food items from prior dietary records or population consumption patterns. For example, validation research may select 42 unique food items across categories like eggs, beef, pork, chicken, dairy, seafood, and processed foods to ensure representativeness [2].

- Standardized Comparison: Researchers enter selected food items into multiple applications, extracting nutrient values for standardized portions (typically 100g) [2]. This enables direct comparison without portion size confounding.

- Reference Database Alignment: App-generated nutrient values are compared against gold-standard references such as the USDA Food Composition Database or other national nutrient databases [2] [3].

- Statistical Analysis: Studies employ various statistical methods including percentage error calculations, coefficients of variation, one-way ANOVAs, and paired t-tests to quantify differences between apps and reference databases [2].
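The standardized comparison and percentage error steps above can be sketched in a few lines of Python. This is a minimal illustration, not the cited studies' code: the function names `per_100g` and `percentage_error` and the example values are hypothetical, and the sketch assumes a signed error relative to the reference value.

```python
def per_100g(nutrient_amount: float, portion_g: float) -> float:
    """Scale a nutrient amount reported for an arbitrary portion to 100 g."""
    if portion_g <= 0:
        raise ValueError("portion_g must be positive")
    return nutrient_amount * 100.0 / portion_g


def percentage_error(app_value: float, reference_value: float) -> float:
    """Signed percentage error of an app value relative to the reference."""
    if reference_value == 0:
        raise ValueError("reference_value must be non-zero")
    return (app_value - reference_value) / reference_value * 100.0


# Hypothetical example: an app lists 4.5 g saturated fat per 150 g serving,
# while the reference database lists 4.0 g per 100 g.
app_sfa = per_100g(4.5, 150.0)          # 3.0 g per 100 g
error = percentage_error(app_sfa, 4.0)  # -25.0, i.e. 25% underreporting
```

Normalizing to 100 g before computing the error keeps portion-size differences between apps from being mistaken for database errors.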

Comparative Experimental Data: Accuracy and Reliability Assessment

Macronutrient Tracking Accuracy

Table 1: Macronutrient Accuracy of Consumer-Grade Diet Apps Compared to USDA Reference Database [3]

| Application | Energy Difference (%) | Carbohydrate Difference (%) | Protein Difference (%) | Fat Difference (%) |
|---|---|---|---|---|
| LifeSum | +1.2 | +0.8 | +9.6 | -5.8 |
| MyFitnessPal | +1.8 | +1.2 | +11.2 | -7.1 |
| Lose It! | +1.1 | +0.9 | +10.1 | -6.3 |
| FatSecret | +1.5 | +1.1 | +10.8 | -6.9 |
| Average Difference | +1.4 | +1.0 | +10.4 | -6.5 |

Specific Nutrient Underreporting in Cardiovascular Health Applications

Table 2: Saturated Fat and Cholesterol Underreporting in Diet Apps (2024 Study) [2]

| Application | Saturated Fat Error (%) | Cholesterol Error (%) | Saturated Fat Omission (%) | Cholesterol Omission (%) |
|---|---|---|---|---|
| COFIT | -40.3* | -60.3* | 47 | 25 |
| MyFitnessPal-Chinese | -13.8* | -26.3* | 15 | 62 |
| MyFitnessPal-English | -22.5* | -35.7* | 18 | 28 |
| Lose It! | -25.1* | -42.8* | 22 | 31 |
| Formosa FoodApp | -5.2 | -8.9 | 5 | 12 |

*Statistically significant (P < 0.05)
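The omission columns in Table 2 can be read as the share of selected food items for which an app reports no value for a nutrient. A minimal sketch of that calculation follows; it assumes missing entries are recorded as `None`, and the function name `omission_rate` and the example data are hypothetical rather than taken from the study.

```python
from typing import Optional, Sequence


def omission_rate(values: Sequence[Optional[float]]) -> float:
    """Percentage of food items for which the app reports no value."""
    if not values:
        raise ValueError("values must not be empty")
    missing = sum(1 for v in values if v is None)
    return missing / len(values) * 100.0


# Hypothetical cholesterol entries (mg per 100 g); None marks a missing field.
cholesterol = [373.0, None, 62.0, None, 85.0, 70.0, None, 14.0]
rate = omission_rate(cholesterol)  # 3 of 8 items missing -> 37.5
```

Keeping omissions separate from reported zeros matters here: a missing field treated as zero would inflate the apparent underreporting error.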

Data Inconsistency Across Food Categories

The coefficient of variation for saturated fat values in consumer-grade apps shows concerning variability across food categories: beef (78-145%), chicken (74-112%), and seafood (97-124%) [2]. Similarly, cholesterol variability remains high in dairy products (71-118%) and prepackaged foods (84-118%) across all selected apps [2]. This high variability indicates inconsistent data quality within apps themselves, not just systematic underreporting.
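For readers who want to reproduce this kind of within-category check, the coefficient of variation is the standard deviation divided by the mean, expressed as a percentage. The sketch below assumes the sample standard deviation (the source does not state which form was used), and the example values are hypothetical.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Sample coefficient of variation in percent (SD / mean * 100)."""
    m = mean(values)
    if m == 0:
        raise ValueError("mean must be non-zero")
    return stdev(values) / m * 100.0


# Hypothetical saturated fat entries (g per 100 g) for beef items in one app.
beef_sfa = [2.0, 4.0, 6.0, 12.0]
cv = coefficient_of_variation(beef_sfa)  # roughly 72%
```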

Decision Framework for Research Application Selection

Table 3: Research Reagent Solutions for Dietary Assessment Validation

| Tool Category | Specific Resource | Research Function |
|---|---|---|
| Reference Databases | USDA Food Composition Database | Gold-standard reference for nutrient values [2] [3] |
| Reference Databases | Taiwan Food Composition Database | Regional reference database for validation studies [2] |
| Validation Frameworks | System Usability Scale (SUS) | Quantifies application usability and user experience [3] |
| Validation Frameworks | Theoretical Domains Framework (TDF) | Evaluates behavior change construct integration [3] |
| Statistical Methods | Percentage Error Calculation | Quantifies deviation from reference values [2] |
| Statistical Methods | Coefficient of Variation | Measures internal consistency and variability [2] |
| Mobile App Testing Tools | Android Profiler / Xcode Instruments | Assesses technical performance across devices [4] |
| Mobile App Testing Tools | Firebase Performance Monitoring | Tracks application launch time and API latency [4] |

Traditional dietary assessment methods remain limited by significant recall bias, researcher subjectivity, and measurement errors that can compromise research validity. Mobile diet-tracking applications offer promising alternatives with reduced participant burden and potential for real-time data capture, but require careful scientific validation before research implementation.

Current evidence indicates that consumer-grade applications demonstrate reasonable accuracy for tracking energy and carbohydrates, making them potentially suitable for general monitoring purposes. However, significant limitations persist regarding systematic underreporting of specific nutrients—particularly saturated fats and cholesterol—high rates of data omission, and substantial variability across food categories. These deficiencies render them problematic for research requiring precise nutrient quantification, especially in cardiovascular disease studies.

Academic apps developed with scientific oversight generally demonstrate superior accuracy and reliability, though they may lack the extensive food databases and polished user interfaces of their commercial counterparts. Researchers should select dietary assessment tools through careful alignment with study objectives, prioritizing validated academic applications for nutrient-specific investigations and considering consumer-grade tools only for general monitoring with appropriate caveats regarding their limitations.

Accurate dietary assessment is fundamental to nutritional epidemiology, yet traditional methods like food frequency questionnaires (FFQs) and 24-hour recalls are plagued by recall bias, participant burden, and estimation errors [5]. The emergence of Artificial Intelligence-Based Dietary Intake Assessment (AI-DIA) represents a transformative approach that leverages computer vision, deep learning, and multimodal large language models (LLMs) to automate and objectify the process of food intake analysis [6] [7]. This technological shift addresses critical limitations in traditional methods, particularly the consistent underreporting of energy intake, which is especially problematic in obesity research and clinical nutrition [6]. As AI-DIA systems evolve from research prototypes to commercially available applications, validating their accuracy against research-grade methods becomes imperative for researchers, clinical scientists, and drug development professionals who rely on precise nutritional data.

AI-DIA technologies typically employ convolutional neural networks (CNNs) for food detection and classification, with more recent architectures incorporating end-to-end deep learning pipelines that process digital food images to estimate volume, energy, and nutrient content with minimal human intervention [6] [8]. These systems are increasingly deployed in mobile health applications that enable real-time dietary monitoring while significantly reducing participant burden [9] [10]. The validation of these technologies against established reference methods forms a critical research focus, with studies comparing AI estimations to weighed food records, doubly labeled water, and assessments by registered dietitians [6] [5].

Performance Metrics: How AI-DIA Compares to Established Methods

Systematic reviews of AI-DIA validation studies reveal that AI methods achieve accuracy levels comparable to—and potentially exceeding—human estimations for certain food types [6]. A 2023 systematic review analyzing 52 studies found that average relative errors for calorie estimation ranged from 0.10% to 38.3% when compared to ground truth measurements, while volume estimation errors ranged from 0.09% to 33% [6]. Performance was significantly better for images containing single or simple foods compared to complex mixed meals, highlighting a continuing challenge in the field.

A 2025 systematic review specifically examining the validity and accuracy of AI-DIA methods reported that six of thirteen studies demonstrated correlation coefficients exceeding 0.7 for calorie estimation when comparing AI methods to traditional assessment approaches [5] [11]. Similarly, six studies achieved correlations above 0.7 for macronutrient estimation, while four studies reached this threshold for micronutrients [5]. These correlations indicate strong agreement with reference methods in fewer than half of the included studies, with variation across nutrients and food types.

Table 1: Performance Metrics of AI-DIA Systems from Recent Validation Studies

| Measurement Type | Performance Range | Key Findings | Primary Limitations |
|---|---|---|---|
| Calorie Estimation | Relative error: 0.10%-38.3% [6]; Correlation: >0.7 in 6/13 studies [5] | Similar accuracy to human estimators for simple foods [6] | Performance decreases with mixed meals, occlusions [7] |
| Volume Estimation | Relative error: 0.09%-33% [6] | Potential to exceed human estimation accuracy [6] | Lacks depth information in 2D images [6] |
| Macronutrient Estimation | Correlation: >0.7 in 6/13 studies [5] | Strongest for carbohydrates, proteins [5] | High variability for fats [12] |
| Food Classification | 79% of studies used CNN architectures [6] | High accuracy on large, standardized datasets [6] | Limited by database coverage of regional foods [7] |

Performance of Large Language Models in Dietary Assessment

Recent research has evaluated the emergent capabilities of general-purpose multimodal LLMs for nutritional estimation from food images. A 2025 study compared three leading models—ChatGPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro—using standardized food photographs with reference objects for scale [8]. The models were tasked with identifying food components and estimating nutritional content, with results compared against values obtained through direct weighing and nutritional database analysis.

Table 2: Performance Comparison of Multimodal LLMs in Dietary Assessment (Adapted from [8])

| Model | Mean Absolute Percentage Error (MAPE), Weight Estimation | MAPE, Energy Estimation | Correlation with Reference Values | Systematic Bias Pattern |
|---|---|---|---|---|
| ChatGPT-4o | 36.3% | 35.8% | 0.65-0.81 | Underestimation increasing with portion size |
| Claude 3.5 Sonnet | 37.3% | 35.8% | 0.65-0.81 | Underestimation increasing with portion size |
| Gemini 1.5 Pro | 64.2%-109.9% | 64.2%-109.9% | 0.58-0.73 | Underestimation increasing with portion size |

The study found that ChatGPT and Claude achieved similar accuracy, comparable to traditional self-reported dietary assessment methods but without the associated user burden [8]. However, all models exhibited systematic underestimation that increased with portion size, with bias slopes ranging from -0.23 to -0.50 [8]. This consistent pattern suggests that current general-purpose LLMs, while promising, are not yet suitable for precise dietary assessment in clinical or athletic populations where accurate quantification is critical.
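To make the notion of a bias slope concrete, the sketch below regresses the estimation error (estimate minus reference) on the reference value using ordinary least squares. This is one plausible reading of the metric, stated as an assumption: the study may instead have regressed on the mean of the two measurements, as in a classic Bland-Altman plot. The helper name `ols_slope` and the data are hypothetical.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have equal length of at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must not be constant")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


# Hypothetical weighed portions (g) and model estimates (g).
reference_g = [100.0, 200.0, 300.0, 400.0]
estimated_g = [95.0, 160.0, 225.0, 290.0]
differences = [e - r for e, r in zip(estimated_g, reference_g)]
slope = ols_slope(reference_g, differences)  # -0.35
```

A slope of -0.35 means that each additional 100 g of food widens the underestimate by about 35 g, which is the pattern of underestimation growing with portion size described above.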

Mobile Application Accuracy in Real-World Settings

Validation studies of consumer-facing nutrition applications reveal significant variability in their agreement with reference methods. A 2021 study comparing five popular apps (FatSecret, YAZIO, Fitatu, MyFitnessPal, and Dine4Fit) against the Polish reference method (Dieta 6.0) found that all apps tended to overestimate energy intake [12]. When applying strict criteria (±5% as perfect agreement, ±10% as sufficient agreement), none of the apps could be recommended as a replacement for the reference method for scientific or clinical use [12].
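The agreement criteria can be expressed as a small classification rule. In this sketch the ±5% and ±10% thresholds come from the text, while the function name, the "insufficient" label for larger deviations, and the inclusive boundaries are assumptions made for illustration.

```python
def agreement_category(app_value: float, reference_value: float) -> str:
    """Classify an app estimate by its relative deviation from the reference."""
    if reference_value == 0:
        raise ValueError("reference_value must be non-zero")
    deviation = abs(app_value - reference_value) / abs(reference_value) * 100.0
    if deviation <= 5.0:
        return "perfect"
    if deviation <= 10.0:
        return "sufficient"
    return "insufficient"  # assumed label for deviations beyond +/-10%


# Hypothetical daily energy estimates (kcal) against a 2000 kcal reference.
labels = [agreement_category(a, 2000.0) for a in (2060.0, 2180.0, 2300.0)]
# -> ["perfect", "sufficient", "insufficient"]
```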

The study employed Bland-Altman analysis to assess agreement, finding the smallest bias for energy, protein, and fat intake in Dine4Fit (-23 kcal, -0.7 g, and 3 g, respectively), though with wide limits of agreement [12]. For carbohydrate intake, the lowest bias was observed with FatSecret and Fitatu [12]. These results highlight the critical limitations of consumer-grade apps for research applications, despite their popularity and convenience.
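As a reminder of what a Bland-Altman analysis reports, the sketch below computes the bias (mean difference) and the conventional 95% limits of agreement (bias ± 1.96 standard deviations of the differences). The function name and data are hypothetical, and the sketch assumes approximately normally distributed differences.

```python
from statistics import mean, stdev


def bland_altman(app_values, reference_values):
    """Return (bias, lower limit, upper limit) for paired measurements."""
    if len(app_values) != len(reference_values) or len(app_values) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - r for a, r in zip(app_values, reference_values)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


# Hypothetical daily energy intake (kcal): app versus reference method.
app_kcal = [1900.0, 2100.0, 2250.0, 1750.0]
ref_kcal = [2000.0, 2000.0, 2200.0, 1800.0]
bias, lower, upper = bland_altman(app_kcal, ref_kcal)
```

In this example the bias is zero while the limits of agreement span roughly ±179 kcal, which illustrates how a small average bias can coexist with wide limits of agreement for individual participants.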

Experimental Protocols for AI-DIA Validation

Standardized Validation Methodology

Rigorous validation of AI-DIA systems requires standardized protocols that enable direct comparison with established reference methods. The predominant approach involves collecting digital food images under controlled conditions with simultaneous ground truth measurements, typically through direct weighing of foods (weighed food records) or doubly labeled water for energy intake validation [6] [5].

A typical validation protocol follows these key stages:

- Food Image Acquisition: Standardized photography of individual food items and complete meals under controlled lighting conditions, often including a reference object for scale (e.g., checkered placemat, fiducial marker, or standard cutlery) [8]. Studies typically analyze between 576 and 130,517 images, depending on scope and resources [5].

- Ground Truth Establishment: Simultaneous measurement of the actual foods using reference methods: weighed food records for portion size [6], calculation using nutrient tables for energy and nutrient content [6], or doubly labeled water for total energy expenditure validation [6].

- AI System Processing: Feeding images through the AI-DIA system for automated food identification, portion size estimation, and nutrient calculation using integrated food composition databases [7].

- Statistical Comparison: Calculating agreement metrics between AI estimates and ground truth, including relative error (|actual - estimated| / actual × 100) [6], correlation coefficients [5], mean absolute percentage error (MAPE) [8], and Bland-Altman analysis for assessing systematic bias [10] [12].
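Two of the agreement metrics from the statistical comparison stage can be sketched as follows: MAPE as the mean of the per-item relative errors, and the Pearson correlation coefficient. The function names and example values are hypothetical, and the code is an illustration rather than any study's analysis script.

```python
from math import sqrt


def mape(estimated, actual):
    """Mean absolute percentage error of estimates against ground truth."""
    if len(estimated) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(a - e) / abs(a) for e, a in zip(estimated, actual))
    return total / len(actual) * 100.0


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("inputs must have equal length of at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical energy content (kcal): weighed ground truth and AI estimates.
actual_kcal = [200.0, 400.0, 500.0, 800.0]
estimated_kcal = [180.0, 300.0, 450.0, 600.0]
error_pct = mape(estimated_kcal, actual_kcal)  # 17.5
r = pearson_r(estimated_kcal, actual_kcal)
```

The example shows why both metrics are reported together: the estimates correlate very strongly with the ground truth even though they are 17.5% off on average, because correlation is insensitive to consistent underestimation.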

The Ghithaona application validation study conducted among Palestinian undergraduates exemplifies a robust validation approach [10]. Researchers compared dietary intake assessments from the AI-DIA application against 3-day food records (3-DFR) in a sample of 70 participants. They collected dietary data using both methods, with the 3-DFR administered in the second week following app use to minimize conditioning effects. Statistical analysis included paired t-tests for mean differences, Pearson correlations for agreement, and Bland-Altman plots to visualize limits of agreement [10].
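The paired t-test used in designs like this one tests whether the mean within-participant difference between two methods is zero. The sketch below computes only the t statistic and degrees of freedom with the standard library; obtaining a p-value requires the t distribution, for example via `scipy.stats.ttest_rel`. The function name and data are hypothetical and unrelated to the study's results.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(method_a, method_b):
    """Return (t, degrees of freedom) for paired measurements."""
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    n = len(diffs)
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("differences must not all be identical")
    t = mean(diffs) / (sd / sqrt(n))
    return t, n - 1


# Hypothetical daily energy intake (kcal) for five participants.
app_kcal = [2100.0, 1950.0, 2300.0, 1800.0, 2050.0]
record_kcal = [2000.0, 1900.0, 2250.0, 1850.0, 1950.0]
t_stat, df = paired_t_statistic(app_kcal, record_kcal)
```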

Specialized Protocols for LLM Evaluation

The evaluation of multimodal LLMs for dietary assessment requires specialized protocols that account for their unique capabilities and limitations. The 2025 study evaluating ChatGPT-4o, Claude 3.5 Sonnet, and Gemini 1.5 Pro employed this rigorous methodology [8]:

- Standardized Food Photographs: 52 standardized images including individual food components (n=16) and complete meals (n=36) across three portion sizes (small, medium, large).

- Reference Objects: All photographs included visible cutlery and plates of standard dimensions to provide size references for estimation.

- Consistent Prompting: Identical prompts were used across all models: "Identify the food components in this image and estimate the weight (g), energy content (kcal), and macronutrient composition (carbohydrates, protein, fat in g). Use the visible cutlery and plates as size references."

- Reference Values: Obtained through direct weighing of food components and nutritional database analysis (Dietist NET).

- Performance Metrics: MAPE, Pearson correlations, and systematic bias analysis using Bland-Altman plots with bias slopes.

This protocol revealed the systematic underestimation bias common across all models and quantified the performance differences between them [8].

Table 3: Essential Research Reagents and Tools for AI-DIA Validation Studies

| Tool/Resource | Function/Purpose | Examples/Standards |
|---|---|---|
| Reference Method | Ground truth establishment for validation | Weighed food records, doubly labeled water, dietitian assessment [6] [5] |
| Standardized Food Image Databases | Training and testing AI models | Large-scale, culturally diverse datasets with nutritional annotation [6] [7] |
| Food Composition Databases | Nutrient calculation reference | Country-specific databases (e.g., USDA, Polish Food Composition DB) [12] |
| Portion Size Estimation Aids | Visual reference for volume estimation | Atlas of photographs, household measures, reference objects [10] [12] |
| Statistical Analysis Tools | Agreement metrics calculation | Bland-Altman analysis, correlation coefficients, relative error [6] [10] |
| Validation Frameworks | Standardized evaluation protocols | PRISMA guidelines for systematic reviews, controlled clinical trials [5] |

AI-DIA technologies have reached a stage of development where their accuracy for calorie and macronutrient estimation is comparable to traditional self-reported methods, with the significant advantage of reduced participant burden [6] [8]. However, systematic challenges remain, including portion size underestimation (particularly for larger portions), limited performance with mixed meals, and inadequate representation of culturally diverse foods in training databases [6] [7] [8].

For research applications, current evidence suggests that AI-DIA systems are not yet ready to replace reference methods in clinical trials or studies requiring precise quantification [8] [12]. However, they show significant promise for large-scale nutritional surveillance, longitudinal monitoring studies, and personalized nutrition interventions where relative changes rather than absolute values are of primary interest [7] [10].

The field requires continued development focused on several key areas: (1) creating large-scale, culturally diverse food image databases with adequate nutritional annotation; (2) improving portion size estimation algorithms, particularly for complex mixed dishes; (3) establishing standardized validation protocols to enable cross-study comparisons; and (4) addressing systematic biases in current models [6] [7]. As these challenges are addressed, AI-DIA systems are poised to become increasingly valuable tools for researchers and clinicians seeking accurate, low-burden dietary assessment methods.

The integration of artificial intelligence (AI) into nutritional sciences is fundamentally reshaping research methodologies and expanding the boundaries of dietary assessment. Understanding these core AI technologies is essential to validating mobile diet tracking apps against research-grade methods. AI, particularly through its subfields of machine learning (ML), deep learning (DL), and data mining, offers innovative solutions to overcome traditional limitations in nutrition research, such as self-reporting inaccuracies, complex dietary pattern analysis, and the personalization of dietary advice [13]. These technologies are versatile in handling complex, multidimensional relationships within nutritional datasets, enabling researchers to extract meaningful insights from vast amounts of dietary, biochemical, and health-related data [13]. This guide provides a comparative analysis of these core AI techniques, focusing on their operational mechanisms, applications in dietary validation studies, and supporting experimental data, equipping researchers with the knowledge to critically evaluate and implement these technologies in rigorous scientific inquiry.

Core AI Techniques Demystified: A Comparative Framework

At the heart of modern nutritional informatics lie three distinct yet interconnected AI paradigms. Their comparative strengths and operational characteristics are foundational to selecting the appropriate tool for validation research.

Table 1: Comparative Analysis of Core AI Techniques in Nutrition

| AI Technique | Primary Function | Common Algorithms/Models | Typical Applications in Nutrition | Data Requirements |
|---|---|---|---|---|
| Machine Learning (ML) | Identify patterns and make predictions from structured data. | Random Forests, Support Vector Machines (SVM), Collaborative Filtering [13]. | Predictive modeling for disease risk, personalized nutrition recommendation systems [13]. | Large volumes of structured data (e.g., nutrient databases, health records). |
| Deep Learning (DL) | Process and interpret complex, unstructured data through layered neural networks. | Convolutional Neural Networks (CNN), ResNet, EfficientNet [13]. | Food recognition from images, automated dietary assessment via photo analysis [13]. | Very large datasets of unstructured data (e.g., thousands of food images). |
| Data Mining | Discover previously unknown patterns and relationships in large datasets. | Conditional Random Fields (CRF), Named-Entity Recognition (NER) models [14]. | Text mining of scientific literature for food-disease associations, nutrient information extraction [14]. | Large, often textual, datasets (e.g., biomedical literature, electronic health records). |

The application of these techniques is often sequential and complementary. Data mining can structure unstructured text from scientific literature or food logs. ML models then use this structured data to predict health outcomes or personalize recommendations. Meanwhile, DL operates on the front lines of data acquisition, transforming raw images of food into quantifiable dietary data, thereby automating the first and most error-prone step in many dietary assessments [13] [14].

Experimental Validation: Assessing the Accuracy of AI-Driven Tools

A critical step in the research pipeline is validating the output of AI-driven tools, such as consumer-grade mobile diet apps, against research-grade reference methods (RMs). These studies typically involve entering standardized dietary records into various applications and comparing the calculated energy and nutrient intakes against gold-standard databases or software.

Table 2: Summary of Validation Studies on Nutrition Apps' Accuracy

| Reference Study | Apps Tested | Reference Method (RM) | Key Finding (Energy) | Key Finding (Macronutrients) |
|---|---|---|---|---|
| Tosi et al. (2021) [15] | FatSecret, Lifesum, MyFitnessPal, Yazio, Melarossa. | Food Composition Database for Epidemiologic Studies in Italy (BDA). | Apps tended to underestimate total energy intake [15]. | General underestimation of lipids and carbs; proteins overestimated by some apps [15]. |
| Wadolowska et al. (2021) [12] | FatSecret, YAZIO, Fitatu, MyFitnessPal, Dine4Fit. | Polish RM (Dieta 6.0 software). | Apps tended to overestimate energy intake [12]. | Mixed over- and under-estimation of macronutrients; no app was a perfect substitute for the RM [12]. |
| Chen et al. (2019) [16] | LifeSum, MyFitnessPal, Lose It!, others. | USDA Food Composition Database. | Average difference of +1.4% for calories vs. USDA [16]. | Accurate for carbs (+1.0%), less so for protein (+10.4%) and fat (-6.5%) [16]. |

Detailed Experimental Protocol

To ensure reproducibility, the standard validation protocol is outlined below. This methodology is adapted from the rigorous approaches used in the cited studies [15] [12].

- App Selection: Identify apps based on predefined, objective criteria such as popularity (e.g., >1 million downloads), user ratings (e.g., >4 stars), language availability, and the presence of a food composition database [15] [12].

- Test Data Preparation: Utilize existing dietary records from prior studies, typically comprising 2-3 day food diaries or 24-hour recalls collected by trained interviewers. Portion sizes are verified using photographic atlases or standard measures [12].

- Data Entry: Experienced dietitians enter the test data into each target app and the RM software to minimize user error. All food items are matched as closely as possible.

- Data Analysis: The energy and nutrient outputs from the apps are systematically compared to the RM. Statistical analyses include:

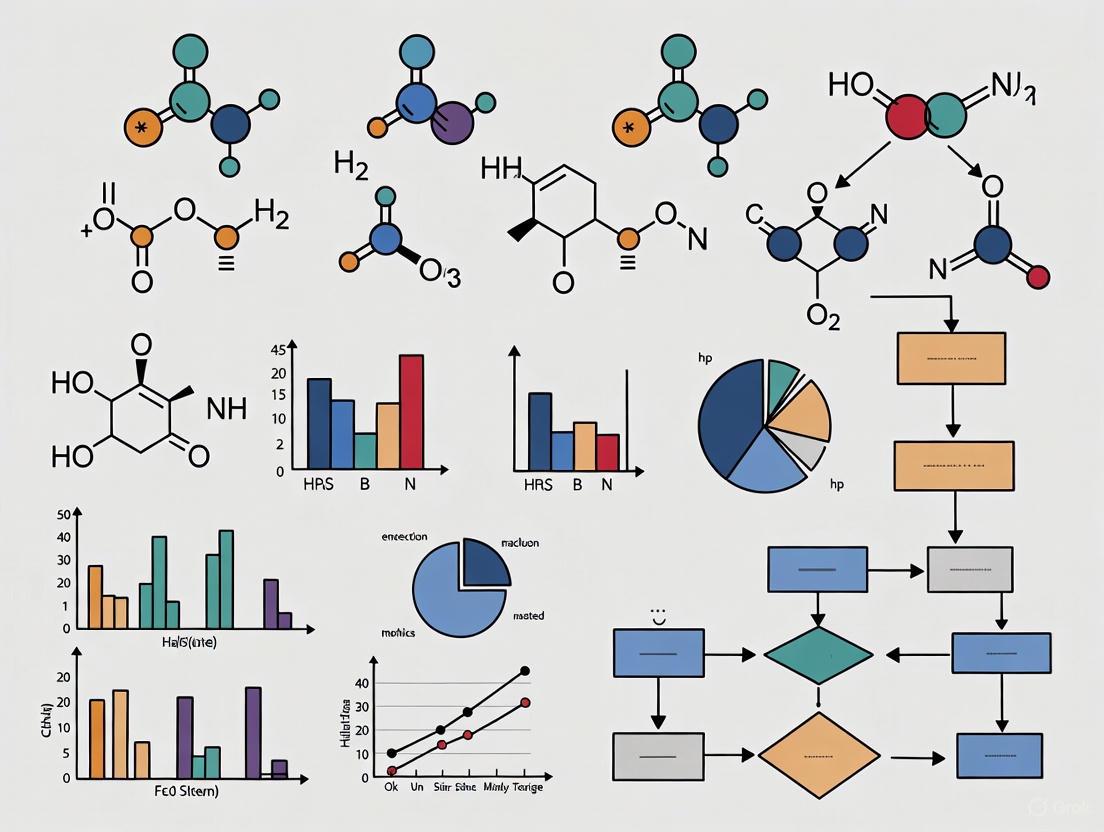

The workflow for this validation process is systematic and can be visualized as follows:

To conduct rigorous validation studies and advance the field of AI in nutrition, researchers rely on a suite of key resources. The following table details these essential tools and their functions.

Table 3: Key Research Reagent Solutions for AI Nutrition Validation Research

| Resource Category | Specific Example | Function in Research |
|---|---|---|
| Reference Food Composition Databases (FCDs) | USDA Food Composition Databases [16], Italian BDA [15], Polish Food Composition Database [12]. | Serve as the gold standard for calculating the energy and nutrient content of test diets; critical for validating the output of consumer apps. |
| Research-Grade Dietary Analysis Software | Dieta 6.0 (Poland) [12], Nutrition Data System for Research (NDSR) [9]. | Professional software used in clinical and research settings to analyze dietary intake data based on reference FCDs; often used as the comparator in validation studies. |
| Biomedical Named-Entity Recognition (NER) Tools | FooDCoNER, FoodIE, NCBO Annotator [14]. | Data mining tools that automatically scan and extract food, nutrient, and phytochemical terms from unstructured scientific literature, enabling large-scale evidence synthesis. |
| National Dietary Surveillance Data | What We Eat in America (WWEIA), NHANES [17]. | Nationally representative datasets on food and nutrient consumption; used to understand population-level dietary patterns and inform model training. |

The objective comparison of core AI techniques reveals a dynamic and rapidly evolving landscape. While ML, DL, and data mining each offer powerful capabilities, validation studies consistently show that consumer-grade applications relying on these technologies are not yet perfect substitutes for research-grade methods [15] [12]. The observed discrepancies in energy and nutrient estimation are often attributed to the use of non-country-specific or unverified food composition databases within apps [15] [12]. Future work must focus on the development of standardized, transparent, and high-quality frameworks for the design and validation of AI-driven nutritional tools. For researchers and drug development professionals, this underscores the necessity of critical appraisal and rigorous in-house validation before integrating these tools into clinical trials or public health recommendations. The convergence of these AI technologies holds the promise of revolutionizing personalized nutrition, but its foundation must be built upon robust, reproducible, and validated science.

Traditional dietary assessment methods, such as paper-based food diaries (FDs) and 24-hour dietary recalls (24HRs), have long been the standard in clinical and research settings. However, these methods face significant limitations, including reliance on participant memory, high literacy requirements, and substantial participant burden, which can compromise data accuracy [9]. The emergence of mobile health (mHealth) applications represents a paradigm shift in dietary assessment, offering potential solutions to these long-standing challenges through technological innovation.

This guide objectively evaluates the performance of mobile diet tracking applications against traditional research-grade methods, focusing on three core advantages: real-time data capture, reduced participant burden, and objective logging. We present comparative experimental data from validation studies to inform researchers, scientists, and drug development professionals about the capabilities and limitations of these digital tools in rigorous scientific contexts.

Comparative Performance Data: Mobile Apps vs. Traditional Methods

Accuracy of Energy and Macronutrient Assessment

The validity of nutritional data generated by mobile applications varies significantly across platforms and nutrients. The following table summarizes findings from controlled studies that compared popular dietary apps against reference methods.

Table 1: Accuracy of Mobile Applications in Assessing Energy and Macronutrient Intake

| Application Name | Energy Intake Accuracy | Carbohydrate Assessment | Protein Assessment | Fat Assessment | Reference Method |

|---|---|---|---|---|---|

| MyFitnessPal | Overestimated by 7.0% in lab setting [18] | Variable by study [12] [15] | Variable by study [12] [15] | Generally underestimated [15] | Weighed food [18] |

| FatSecret | Tendency to underestimate [15] | Lowest bias among tested apps [12] | N/A | N/A | Food composition database [15] |

| YAZIO | Overestimated by 5.4 kcal average per item [15] | Generally underestimated [15] | Overestimated [15] | Generally underestimated [15] | Italian Food Composition Database [15] |

| Lifesum | Minimal underestimation (-2 kcal average per item) [15] | Generally underestimated [15] | Overestimated [15] | Generally underestimated [15] | Italian Food Composition Database [15] |

| Dine4Fit | Smallest bias (-23 kcal) [12] | N/A | Smallest bias (-0.7 g) [12] | Smallest bias (3 g) [12] | Polish Dieta 6.0 [12] |

| PortionSize | Underestimated by 13.3% in lab setting [18] | N/A | N/A | N/A | Weighed food [18] |

Usability and Adherence Metrics

User engagement and adherence patterns differ substantially between traditional and digital dietary assessment methods, impacting data quality and study completion rates.

Table 2: Usability and Adherence Comparison Between Assessment Methods

| Assessment Method | System Usability Scale (SUS) Score | Adherence Rate | Key Adherence Findings |

|---|---|---|---|

| Paper-Based Food Diaries | Not systematically assessed | Declines over time [19] | High participant burden; tedious nature; misplacement issues [19] |

| Mobile Applications (Overall) | 82% (9/11) received favorable scores [9] | Variable by platform | Immediate feedback improves sustained engagement [19] |

| Bitesnap | Favorable SUS score [9] | N/A | Flexible dietary and food timing functionality [9] |

| App-Based Monitoring (FatSecret) | N/A | 50.1% frequency rate over 8 weeks [19] | Consistent self-monitoring associated with significant weight loss (1.5±2.1 kg) [19] |

Experimental Protocols for Validation Studies

Laboratory-Based Validation Protocol

The gold standard for validating dietary assessment applications involves controlled laboratory studies with weighed food components:

Study Design: Randomized crossover design where participants use multiple applications to estimate intake in a laboratory setting [18]

Food Preparation: Participants are provided with pre-weighed plated meals with exact gram measurements recorded for all components [18]

Intake Estimation: Participants use assigned applications to log their food intake after consumption, with leftovers also weighed to calculate actual consumption [18]

Equivalence Testing: Statistical analysis using two one-sided t-tests (TOST) assesses equivalence between application estimates and weighed food values, typically using ±21% bounds [18]

Error Calculation: Relative absolute error is calculated for energy and nutrients, with comparison between applications using dependent samples t-tests [18]
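The equivalence test and error calculation in this protocol can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in [18]. It relies on the standard result that a paired TOST at the 5% level is equivalent to checking that the 90% confidence interval of the mean difference lies inside the equivalence bounds. The function names, the choice to express the ±21% bound relative to the mean weighed intake, and the sample energy values are all assumptions. `t_crit` is the one-sided 5% critical value of Student's t for n - 1 degrees of freedom, read from a table (1.833 for n = 10).

```python
import math
import statistics


def relative_absolute_error(estimate, reference):
    """Absolute error as a percentage of the weighed reference value."""
    return abs(estimate - reference) * 100.0 / reference


def tost_equivalent(app, weighed, t_crit, bound_pct=21.0):
    """Paired TOST expressed through the 90% CI of the mean difference.

    Returns (is_equivalent, (ci_lower, ci_upper), margin).
    The margin is bound_pct percent of the mean weighed value (an assumption).
    """
    diffs = [a - w for a, w in zip(app, weighed)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    std_err = statistics.stdev(diffs) / math.sqrt(n)
    margin = bound_pct / 100.0 * statistics.mean(weighed)
    lower = mean_diff - t_crit * std_err
    upper = mean_diff + t_crit * std_err
    return (-margin < lower and upper < margin), (lower, upper), margin


# Hypothetical energy values (kcal) for ten test meals.
weighed_kcal = [500, 620, 480, 550, 700, 610, 530, 590, 640, 560]
app_kcal = [530, 600, 510, 580, 690, 650, 520, 640, 660, 540]

equivalent, ci, margin = tost_equivalent(app_kcal, weighed_kcal, t_crit=1.833)
errors = [relative_absolute_error(a, w) for a, w in zip(app_kcal, weighed_kcal)]
print(f"equivalent: {equivalent}, 90% CI: {ci}, margin: +/-{margin:.1f} kcal")
print(f"mean relative absolute error: {statistics.mean(errors):.1f}%")
```

Passing the critical value in as a parameter keeps the sketch free of third-party dependencies; a statistics package would normally supply it along with the TOST p-values.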

Free-Living Validation Protocol

Real-world validation studies employ different methodologies to assess application performance under normal living conditions:

Reference Method Selection: Studies typically use country-specific reference software (e.g., Dieta 6.0 in Poland, Food Composition Database for Epidemiologic Studies in Italy) as the comparison standard [12] [15]

Dietary Records: Participants complete traditional dietary records (typically 2-3 days) with portion sizes verified using photographic atlases or household measures [12]

Data Entry: Experienced dietitians enter the same dietary data into both the reference software and mobile applications being tested [12]

Statistical Analysis: Bland-Altman plots assess agreement between methods, calculating bias and limits of agreement for energy and macronutrients [12] [15]

Cross-Classification Analysis: Evaluates how applications categorize participants into low, medium, and high intake groups compared to reference method [20]
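The agreement statistics named in this protocol can be illustrated with a short sketch. It assumes the conventional Bland-Altman limits of agreement (bias ± 1.96 standard deviations of the paired differences) and rank-based tertiles for the low, medium, and high intake groups. The function names and the sample intakes are hypothetical and are not taken from the cited studies.

```python
import statistics


def bland_altman(app, reference):
    """Return (bias, lower_limit, upper_limit) for paired measurements."""
    diffs = [a - r for a, r in zip(app, reference)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def tertile_labels(values):
    """Rank-based tertiles: 0 = low, 1 = medium, 2 = high intake."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    labels = [0] * len(values)
    for rank, index in enumerate(order):
        labels[index] = rank * 3 // len(values)
    return labels


def cross_classification(app, reference):
    """Share of participants in the same tertile and in opposite tertiles."""
    app_groups = tertile_labels(app)
    ref_groups = tertile_labels(reference)
    n = len(app_groups)
    same = sum(a == r for a, r in zip(app_groups, ref_groups)) / n
    opposite = sum(abs(a - r) == 2 for a, r in zip(app_groups, ref_groups)) / n
    return same, opposite


# Hypothetical daily energy intakes (kcal) for six participants.
reference_kcal = [1800, 2100, 2500, 1950, 2300, 2700]
app_kcal = [1750, 2000, 2450, 1900, 2350, 2600]

bias, lower, upper = bland_altman(app_kcal, reference_kcal)
same, opposite = cross_classification(app_kcal, reference_kcal)
print(f"bias: {bias:.0f} kcal, limits of agreement: {lower:.0f} to {upper:.0f} kcal")
print(f"same tertile: {same:.0%}, opposite tertile: {opposite:.0%}")
```

A negative bias here means the app reports lower intake than the reference software; a high share in the same tertile with few participants in opposite tertiles indicates that the app ranks individuals similarly to the reference method.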

Diagram 1: Dietary App Validation Workflows

Key Advantages of Mobile Diet Tracking Applications

Real-Time Data Capture

Mobile applications facilitate immediate dietary logging at the point of consumption, reducing the reliance on memory that limits traditional recall methods:

Temporal Precision: 73% (8 of 11) of reviewed apps automatically record food time stamps, with 36% (4 of 11) allowing users to edit these time stamps for accuracy [9]. This capability is particularly valuable for circadian rhythm research and studies exploring meal timing effects on metabolism [9].

Ecological Momentary Assessment: Data capture occurs in natural environments rather than artificial clinical settings, providing more representative information about actual eating behaviors and contexts [21].

Immediate Feedback: Users receive real-time information about nutritional intake, which not only supports behavior change but also enhances data accuracy by allowing immediate correction of logging errors [19].

Reduced Participant Burden

Digital tools decrease the time, effort, and cognitive load required for comprehensive dietary self-monitoring:

Automated Calculations: Applications automatically sum nutrient intake and compare against goals, eliminating manual calculations required in paper diaries [9].

User-Friendly Interfaces: 82% (9 of 11) of evaluated apps received favorable System Usability Scale scores, indicating generally intuitive designs that require minimal instruction [9].

Lower Barrier to Entry: Compared to traditional methods that require literacy and mathematical skills, app-based tracking utilizes visual interfaces, barcode scanning, and voice input options that accommodate diverse user capabilities [12].

Objective Logging and Data Integrity

Digital platforms enhance data quality through automated processes and reduced subjectivity:

Standardized Food Databases: Applications utilize consistent nutritional databases across users, eliminating variability in individual calculations of nutrient content [12] [15].

Reduced Transcription Errors: Direct electronic capture minimizes data handling errors that can occur when transcribing paper records to digital formats for analysis [9].

Automated Portion Size Estimation: Advanced applications incorporate image-based portion size estimation, reducing subjectivity in portion assessment compared to verbal descriptions or household measures [9].

Diagram 2: Problem-Solution Framework for Dietary Assessment

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for Dietary Assessment Validation Research

| Tool Category | Specific Examples | Research Function | Key Characteristics |

|---|---|---|---|

| Reference Databases | Nutrition Data System for Research (NDSR) [9], Polish Food Composition Database [12], Italian Food Composition Database for Epidemiologic Studies [15] | Gold standard comparison for nutrient calculations | Country-specific, scientifically validated, regularly updated |

| Validation Methodologies | Doubly Labeled Water (DLW) technique [22], Weighed Food Protocol [18] | Objective measures to assess validity of self-reported intake | Considered reference standards independent of self-report errors |

| Statistical Tools | Bland-Altman analysis [12] [20], Two One-Sided T-tests (TOST) [18], Intraclass Correlation Coefficients [23] | Quantitative assessment of agreement between methods | Measures bias, limits of agreement, and equivalence testing |

| Usability Metrics | System Usability Scale (SUS) [9], Computer System Usability Questionnaire (CSUQ) [18] | Standardized assessment of application user experience | Allows cross-study comparison of usability findings |

Mobile diet tracking applications offer distinct advantages over traditional dietary assessment methods, particularly through real-time data capture, reduced participant burden, and more objective logging capabilities. However, significant variability exists in the accuracy of nutrient estimates between platforms, with many applications demonstrating substantial errors in energy and macronutrient assessment.

When selecting mobile applications for research purposes, scientists should consider conducting pilot validation studies against reference methods specific to their population of interest. Particular attention should be paid to the food composition databases underlying applications, as discrepancies between these databases and local food supplies can significantly impact data accuracy. While mobile applications show promise for reducing participant burden and improving ecological validity, their implementation in scientific research requires careful validation and consideration of platform-specific limitations.

Implementing Digital Tools: Methodological Frameworks for Research and Clinical Settings

The use of mobile diet tracking applications in research settings represents a paradigm shift from traditional dietary assessment methods, offering reduced participant burden and real-time data collection. However, this transition requires rigorous validation against established research-grade methods to ensure data accuracy and reliability. Validation studies directly comparing mobile applications to traditional methods like weighed food records and 24-hour recalls provide critical evidence for researchers selecting appropriate digital tools [24]. The convergence of artificial intelligence, expansive food databases, and enhanced user interfaces has accelerated adoption, yet scientific rigor demands careful evaluation of underlying databases, usability metrics, and privacy frameworks before deployment in studies [25] [26].

This guide systematically compares mobile diet tracking technologies through the lens of research validation, providing experimental methodologies and comparative data to inform selection criteria for scientific investigations. We synthesize findings from controlled trials and usability studies to establish evidence-based recommendations for implementing these tools in research contexts while maintaining scientific standards.

Comparative Performance Analysis: Quantitative Data from Validation Studies

Database Accuracy and Nutrient Validation

Table 1: Database and Nutrient Accuracy Comparison Across Dietary Assessment Platforms

| Platform/App Name | Database Size & Features | Validation Method | Key Nutrient Correlation/Accuracy Findings | Reference |

|---|---|---|---|---|

| Cronometer | Tracks up to 84 nutrients; verified database with USDA and branded foods [27] | Comparison to standardized databases | High accuracy for micronutrients; food data carefully checked and approved [27] | [27] |

| Keenoa | AI-powered food recognition with dietitian verification [24] | vs. 3-day food diaries (3DFD) in RCT (N=72) | Significant differences for energy, protein, carbs, % fat, SFA, iron; acceptable for other nutrients [24] | [24] |

| MyFitnessPal | User-generated database; one of the largest available [28] [26] | User experience studies | Difficulties in food item selection (39.3%) and portion sizes (63.9%) reported by users [29] | [29] |

| NutriDiary | >150,000 items; integration of German standard database (BLS) & branded products [25] | Weighed food record comparison | Database structure allows for precise nutrient coding with 82 nutritional components [25] | [25] |

| PortfolioDiet.app | Food-based scoring system for specific diet pattern [30] | vs. 7-day weighed diet records in RCT (N=98) | Strong correlation with reference (r=0.94, p<0.001); significant LDL-C reduction association [30] | [30] |

Usability and Participant Burden Metrics

Table 2: Usability and Acceptance Findings from Experimental Studies

| Platform/App Name | Study Population | Usability Assessment Method | Key Usability Findings | Completion Time/Burden |

|---|---|---|---|---|

| NutriDiary [25] | 74 participants (experts & laypersons) | System Usability Scale (SUS) | Median SUS: 75 (IQR 63-88) indicating "good" usability [25] | Median 35 min (IQR 19-52) for 1-day record [25] |

| EatsUp [31] | 30 adolescents (16±0.70 years) | User Experience Questionnaire (UEQ) | "Excellent" in 5/6 parameters; "Good" for Perspicuity (ease of understanding) [31] | 90% used app ≥7 consecutive days [31] |

| Keenoa [24] | 72 Canadian adults | System Usability Scale (SUS) | 34.2% preferred Keenoa vs. 9.6% preferred traditional food diary [24] | N/A |

| MyFitnessPal [29] | 61 university students | 3-week usability assessment | 93.4% reported easy to use; 91.8% reported it helped change dietary intake [29] | N/A |

Experimental Protocols for App Validation

Protocol 1: Relative Validity Against Traditional Food Records

The randomized crossover design employed by Cohen et al. provides a robust template for validating mobile dietary assessment applications against traditional methods [24]. This methodology effectively controls for intra-individual variation in dietary intake while allowing direct comparison between assessment tools.

Study Population: Recruit 70-100 participants to ensure adequate statistical power, applying inclusion criteria of smartphone ownership and exclusion of nutrition professionals or those with conditions significantly affecting dietary intake [24].

Procedure:

- Randomization: Assign participants to begin with either mobile app or traditional food diary (3DFD) using computer-generated sequence

- Recording Period: Implement two 3-day recording periods (including one weekend day) with washout period between conditions

- Training: Provide standardized portion size estimation training using visual aids (e.g., Dietitians of Canada Handy Guide)

- Data Collection: For app condition, enable automatic food recognition while maintaining capacity for manual entry

- Data Verification: Implement expert review (registered dietitians) of all entries to correct misidentified items and portion errors [24]

Statistical Analysis:

- Conduct nutrient-specific comparisons using Pearson correlation coefficients, cross-classification, and Bland-Altman analysis for agreement assessment

- Calculate the percent difference between methods, with differences of ≤10% generally considered acceptable

- Account for multiple comparisons using appropriate corrections (e.g., Bonferroni) [24]
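Two of the steps above, the percent difference check and the Bonferroni correction, reduce to simple arithmetic. The sketch below is illustrative only: the function names, group means, and p-values are hypothetical, and the 10% threshold is the one stated in the list above.

```python
def percent_difference(app_mean, reference_mean):
    """Signed difference of the app estimate relative to the reference, in percent."""
    return (app_mean - reference_mean) * 100.0 / reference_mean


def bonferroni_significant(p_values, alpha=0.05):
    """Flag each comparison against alpha divided by the number of comparisons."""
    threshold = alpha / len(p_values)
    return {name: p < threshold for name, p in p_values.items()}


# Hypothetical group means (app, food diary) for three nutrients.
means = {"energy_kcal": (1800, 2000), "protein_g": (76, 80), "iron_mg": (11, 12)}
for nutrient, (app_mean, diary_mean) in means.items():
    diff = percent_difference(app_mean, diary_mean)
    verdict = "acceptable" if abs(diff) <= 10 else "outside the 10% threshold"
    print(f"{nutrient}: {diff:+.1f}% ({verdict})")

# Hypothetical p-values from paired nutrient comparisons.
p_values = {"energy_kcal": 0.001, "protein_g": 0.02, "iron_mg": 0.04}
print(bonferroni_significant(p_values))
```

With three comparisons the corrected threshold is 0.05 / 3, so only the smallest of the three hypothetical p-values remains significant.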

Protocol 2: Usability Assessment in Target Populations

Usability testing should reflect the specific study population, as demonstrated by the NutriDiary evaluation which included both experts and laypersons [25]. This approach identifies challenges specific to user technical proficiency.

Study Design:

- Participant Selection: Stratify recruitment to include representatives from anticipated user groups (e.g., different age groups, technical proficiency levels)

- Standardized Tasks: Implement structured recording tasks including predefined sample meals with both generic and branded food items [25]

- Longitudinal Engagement: Assess sustainability with multi-day recording periods (e.g., 7 consecutive days) to identify novelty effect decay [31]

Metrics and Instruments:

- System Usability Scale (SUS): Standardized 10-item questionnaire producing a score from 0 to 100; scores above 68 are considered above average [25]

- User Experience Questionnaire (UEQ): Assesses six dimensions (Attractiveness, Perspicuity, Efficiency, Dependability, Stimulation, Novelty) with benchmark comparisons [31]

- Objective Measures: Record time per food entry, data completeness rates, and participant retention [25]
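For the SUS, the conventional scoring rule can be sketched as follows: each of the ten items is answered on a 1 to 5 scale, odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. The function name and the participant responses below are hypothetical.

```python
import statistics


def sus_score(responses):
    """Convert ten 1-5 Likert responses into a 0-100 SUS score."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly ten responses between 1 and 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


# Hypothetical responses from three participants.
participants = [
    [4, 2, 5, 1, 4, 2, 5, 2, 4, 1],
    [3, 3, 4, 2, 3, 3, 4, 2, 3, 3],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
]
scores = [sus_score(p) for p in participants]
print(scores, "median:", statistics.median(scores))
```

Reporting the median with an interquartile range, as in the NutriDiary evaluation, is a reasonable choice because SUS scores are bounded and often skewed.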

Analysis:

- Calculate descriptive statistics for all usability metrics

- Use regression models to identify participant characteristics (age, technical background) associated with usability scores [25]

- Conduct qualitative analysis of open-ended feedback to identify specific interface challenges

Research Reagent Solutions: Essential Materials for Dietary App Validation

Table 3: Essential Materials and Tools for Dietary Assessment Validation Studies

| Research Tool | Function/Purpose | Implementation Example |

|---|---|---|

| Weighed Food Scales | Gold-standard reference method for food intake quantification | 7-day weighed food records in Portfolio Diet validation [30] |

| Standardized Food Atlases | Visual portion size estimation aids | Dietitians of Canada Handy Guide to Serving Sizes [24] |

| System Usability Scale (SUS) | Standardized usability assessment with 10-item questionnaire | NutriDiary evaluation (median SUS: 75) [25] |

| User Experience Questionnaire (UEQ) | Multidimensional usability assessment across 6 parameters | EatsUp evaluation in adolescent population [31] |

| Recovery Biomarkers | Objective validation of energy intake reporting | Doubly labeled water, urinary nitrogen (referenced in [32]) |

| Nutrient Analysis Software | Reference standard for nutrient calculation | ESHA Food Processor SQL used in Portfolio Diet study [30] |

Workflow Visualization: App Validation Methodology

Technical Implementation Framework

Database Architecture and Integration Standards

Research-grade diet tracking applications require robust database architectures that integrate multiple data sources while maintaining accuracy. The NutriDiary framework exemplifies this approach with its dual-database structure comprising a nutrient database and product information database [25].

Core Components:

- Standardized Nutrient Database: Foundation based on established national food composition databases (e.g., German BLS in NutriDiary, USDA in Cronometer) providing core nutritional values for generic foods [25] [27]

- Branded Product Integration: Barcode-scanning functionality with continuous expansion through manufacturer data and open databases (Open Food Facts, GS1 Germany) [25]

- Quality Control Mechanisms: Manual verification processes by trained dietitians to match products with appropriate nutrient profiles, particularly for custom or regional foods [25] [24]

Technical Implementation:

- Implement automated data validation checks against standardized ranges for nutrient values

- Establish version control for database updates with documentation of changes

- Create audit trails for user-generated content with flagging systems for implausible entries
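An automated plausibility check of the kind listed above might look like the sketch below. It is not taken from NutriDiary or any other cited app. The `FoodEntry` structure, the range limits, and the 20% tolerance are assumptions; the energy cross-check uses the general Atwater factors (4 kcal/g for protein and carbohydrate, 9 kcal/g for fat).

```python
from dataclasses import dataclass


@dataclass
class FoodEntry:
    """Nutrient values per 100 g of food (hypothetical record layout)."""
    name: str
    energy_kcal: float
    protein_g: float
    carbs_g: float
    fat_g: float


def validate_entry(entry, tolerance=0.2):
    """Return a list of flags describing implausible values; empty if none."""
    flags = []
    macros = {"protein_g": entry.protein_g, "carbs_g": entry.carbs_g, "fat_g": entry.fat_g}
    for field, value in macros.items():
        if not 0 <= value <= 100:
            flags.append(f"{field} outside 0-100 g per 100 g")
    if sum(macros.values()) > 100:
        flags.append("macronutrients sum to more than 100 g per 100 g")
    # Pure fat supplies roughly 900 kcal per 100 g, used here as an upper bound.
    if not 0 <= entry.energy_kcal <= 900:
        flags.append("energy outside 0-900 kcal per 100 g")
    atwater_kcal = 4 * entry.protein_g + 4 * entry.carbs_g + 9 * entry.fat_g
    if atwater_kcal > 0 and abs(entry.energy_kcal - atwater_kcal) / atwater_kcal > tolerance:
        flags.append("stated energy disagrees with Atwater estimate")
    return flags


print(validate_entry(FoodEntry("wholemeal bread", 250, 9, 49, 3)))
print(validate_entry(FoodEntry("bread (user entry)", 2500, 9, 49, 3)))
```

Foods containing alcohol or large amounts of fibre would need extra terms in the energy estimate, so flagged entries are better routed to dietitian review than rejected automatically.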

Privacy and Compliance Framework

Research applications must navigate complex privacy regulations while maintaining data integrity. The implementation should include:

Data Protection Measures:

- Server Infrastructure: Host data on secure university or research institution servers with appropriate encryption [25]

- Consent Management: Implement granular consent procedures specifying data usage purposes, particularly for image collection [31] [33]

- Minimal Data Collection: Collect only essential data elements required for research objectives

Compliance Considerations:

- Regional regulations (GDPR, HIPAA) based on study population location

- Ethical review board approval for data collection methods, particularly for vulnerable populations [31]

- Data anonymization procedures for research analysis while maintaining ability for individual feedback

Based on comparative validation evidence, researchers should prioritize applications with verified databases, demonstrated usability in their target population, and transparent privacy compliance. Cronometer provides exceptional micronutrient tracking suitable for detailed nutritional studies [27], while specialized tools like PortfolioDiet.app offer validated scoring for specific dietary patterns [30]. For general monitoring, apps with dietitian verification features like Keenoa show acceptable agreement with traditional methods for many nutrients [24].

Usability metrics should align with study population characteristics, considering factors like age-specific design preferences evidenced in adolescent studies [31]. Ultimately, selection requires balancing precision requirements with participant burden, recognizing that even validated apps may show nutrient-specific variations in accuracy [24]. Future development should focus on enhancing image recognition capabilities [33] [26] while maintaining the scientific rigor established through these validation frameworks.

In nutritional research, the accuracy of dietary intake data is fundamental to understanding diet-health relationships. Modern mobile diet tracking applications leverage various data capture modalities—text search, barcode scanning, and image recognition—to collect this crucial information. These digital methods are increasingly validated against research-grade techniques like 24-hour dietary recalls and weighed dietary records to assess their reliability for scientific use [25]. This guide provides an objective comparison of these technologies, focusing on their performance metrics, underlying experimental protocols, and practical implementation for researchers and drug development professionals.

Comparative Performance Analysis of Data Capture Modalities

The table below summarizes the core performance characteristics, optimal use cases, and validation data for the three primary data capture modalities.

| Feature | Text Search | Barcode Scanning | Image Recognition |

|---|---|---|---|

| Primary Mechanism | Keyword-based query matching on databases [34] | Optical decoding of barcode patterns [35] [36] | AI-based analysis of visual features and patterns [37] |

| Key Strength | Effective for retrieving information from large, structured text corpora [34] | High speed and accuracy for standardized, packaged foods [25] | Direct identification of non-packaged foods and portion sizes |

| Typical Speed | Sub-second query response on large datasets [34] | Less than 0.04 seconds per scan [35] | Varies by model complexity; can be real-time (e.g., YOLO) [37] |

| Key Performance Metrics | Query latency, recall, precision [34] | Reading Rate, Precision, Misread Rate [38] | Classification Accuracy, Feature Detection Precision [37] |

| Best Suited For | Free-text meal entries, searching recipe databases | Identifying branded, packaged food products with barcodes [25] | Identifying unpackaged foods, estimating volume, and verifying barcode scans [37] [25] |

| Notable Validation | Used in app databases for food entry [25] | NuMob-e-App vs. 24-hr recall: Good validity for energy, carbs, protein [39] | Model accuracy benchmarks (e.g., ResNet, Inception on ImageNet) [37] |

Barcode Scanning: Performance and Experimental Protocols

Among the data capture modalities, barcode scanning is the most mature and widely integrated into dietary apps like NutriDiary and NuMob-e-App [25] [39]. Its performance is critical for user experience and data accuracy.

Performance Benchmarking Data

Independent, third-party testing provides crucial performance data for selecting a barcode scanning engine. The following table summarizes results from a benchmark study using three public datasets of barcode images with varying quality levels [38].

| Dataset (Quality Focus) | Barcode Engine | Reading Rate | Precision | Notes |

|---|---|---|---|---|

| Artelab (In-Focus) [38] | Dynamsoft Barcode Reader | 100% | 100% | Excellent performance on clear images. |

| Artelab (In-Focus) [38] | Commercial SDK A | 91.63% | 100% | Good performance, slightly lower reading rate. |

| Artelab (In-Focus) [38] | ZXing-CPP (Open Source) | 82.36% | 99.44% | Moderate performance with one misread. |

| Artelab (In-Focus) [38] | pyZbar (Open Source) | 89.77% | 99.48% | Good reading rate with one misread. |

| Artelab (Out-of-Focus) [38] | Dynamsoft Barcode Reader | 81.86% | 100% | Maintains high precision on blurry images. |

| Artelab (Out-of-Focus) [38] | Commercial SDK A | 79.07% | 100% | Robust performance, but reading rate drops. |

| Artelab (Out-of-Focus) [38] | ZXing-CPP (Open Source) | 10.23% | 91.67% | Performance severely degraded. |

| Artelab (Out-of-Focus) [38] | pyZbar (Open Source) | 13.95% | 78.95% | Low reading rate and higher misreads. |

| Muenster (Real-Life) [38] | Dynamsoft Barcode Reader | 96.96% | 100% | Handles real-world distortions effectively. |

| Muenster (Real-Life) [38] | Commercial SDK A | 93.26% | 100% | Strong performance in complex conditions. |

| Muenster (Real-Life) [38] | ZXing-CPP (Open Source) | 75.14% | 99.87% | One misread observed. |

| Muenster (Real-Life) [38] | pyZbar (Open Source) | 70.59% | 95.63% | Lower reading rate and multiple misreads. |

Detailed Experimental Protocol for Barcode Scanning

The benchmark data in the previous section was derived from a rigorous experimental methodology, which can be adapted for in-house validation [38].

- 1. Barcode Engine Selection: The test should include a mix of commercial SDKs (e.g., Dynamsoft, Scandit) and open-source alternatives (e.g., ZXing, ZBar) to provide a comprehensive performance landscape.

- 2. Dataset Curation: Use standardized, publicly available datasets that reflect real-world conditions. Key datasets include:

  - Artelab Medium Barcode 1D Collection: Contains images taken with and without autofocus, testing robustness to blur [38].

  - Muenster BarcodeDB: Includes real-life images with distortions like reflections and uneven lighting [38].

  - DEAL Lab’s Barcode Dataset: Comprises EAN-13 barcodes with various sizes and formats [38].

- 3. Performance Metrics Calculation: For each engine and dataset, compute the Reading Rate, Precision, and Misread Rate [38].

- 4. Execution and Analysis: Run the selected engines against all images in the curated datasets. Manually verify and correct the annotations (ground truth) to ensure metric accuracy. Analyze results to determine which engine performs best under the specific conditions relevant to the research context (e.g., low light, damaged barcodes) [38].
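The text names the benchmark metrics but does not give their formulas, so the sketch below uses assumed definitions: reading rate is the share of all images decoded correctly, precision is the share of returned decodes that match the ground truth, and misread rate is the share of returned decodes that do not. The function name, the barcode strings, and the counts are illustrative.

```python
def barcode_metrics(decoded, ground_truth):
    """Compute benchmark metrics (in percent) for one engine on one dataset.

    decoded[i] is the engine output for image i, or None when nothing was read.
    """
    total = len(ground_truth)
    pairs = list(zip(decoded, ground_truth))
    correct = sum(d is not None and d == t for d, t in pairs)
    misread = sum(d is not None and d != t for d, t in pairs)
    returned = correct + misread
    return {
        "reading_rate": 100.0 * correct / total,
        "precision": 100.0 * correct / returned if returned else 0.0,
        "misread_rate": 100.0 * misread / returned if returned else 0.0,
    }


# Illustrative run: 215 images, 193 correct reads, 1 misread, 21 unread.
truth = ["4006381333931"] * 215
decoded = ["4006381333931"] * 193 + ["4006381333938"] + [None] * 21

for name, value in barcode_metrics(decoded, truth).items():
    print(f"{name}: {value:.2f}%")
```

Under these definitions an engine that returns few but always correct results keeps a precision of 100% while its reading rate falls, which is the pattern the commercial engines show on the out-of-focus images.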

Barcode scanning benchmark workflow

Image Recognition and Text Search in Diet Tracking

Image Recognition in Dietary Assessment

Image recognition (IR) technology is a field of computer vision that uses deep learning models to interpret visual content [37]. In diet tracking, it has two primary applications:

- Food Identification: Convolutional Neural Networks (CNNs) like ResNet, Inception, and VGG are trained on large datasets of food images to classify the type of food [37]. Object detection models such as YOLO (You Only Look Once) can identify multiple food items within a single image in real-time [37].

- Portion Size Estimation: By analyzing the visual properties of the food and using reference objects, IR systems can estimate the volume or weight of the consumed portion.

A major advantage of image recognition is its ability to capture context. Advanced systems can go beyond simple identification to provide "crucial cultural context and usage nuances," which is vital for accurately interpreting dietary intake [40]. However, its accuracy is highly dependent on the quality and diversity of its training data and environmental factors like lighting and angle.

Image recognition workflow for diet tracking

Text Search in Dietary Apps

Text search is a foundational modality in diet apps, typically implemented in two ways:

- Database Search: This is the most common method. Users type the name of a food (e.g., "whole wheat bread"), and the app searches a backend food and nutrient database to return matching items. The usability of this method hinges on the database's comprehensiveness and the search algorithm's effectiveness [25].

- Free-Text Entry with NLP: For foods not found in the database, users can enter a free-text description. Researchers can then process these entries using Natural Language Processing (NLP) techniques for subsequent coding and analysis [25].

For large-scale applications, modern full-text search technologies in databases (like Azure SQL's Full-Text Search) enable efficient querying of large text corpora, returning results in sub-second times even on billions of rows, a significant improvement over legacy methods that could take a full business day [34].

Validation in Research: A Case Study

The validity of mobile diet tracking apps is often tested against established research-grade methods like the 24-hour dietary recall, which is considered a reference standard [39].

A 2025 study validated the NuMob-e-App, a tablet-based dietary record app for older adults, against a structured 24-hour dietary recall [39]. The study involved 104 independently living adults with a mean age of 75.8 years. Participants recorded their intake in the app for three consecutive days [39].

- Methodology: Nutrient intake was analyzed for energy, macronutrients, and food groups. Data were analyzed for equivalence using Two One-Sided Tests (TOST), agreement using Intraclass Correlation Coefficients (ICC), and systematic differences using Bland-Altman plots [39].

- Findings: The app demonstrated good relative validity for assessing energy, carbohydrate, and protein intake, with ICCs for macronutrients ranging between 0.677 and 0.951 [39]. While equivalence was not achieved for all 44 variables tested, the study supported the app's potential for preventive dietary self-monitoring in seniors, despite a general tendency toward underestimation [39].
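To make the ICC concrete, the sketch below computes one common form, ICC(2,1): two-way random effects, absolute agreement, single measurement. The text does not state which ICC form the study in [39] used, so this choice is an assumption, as are the function name and the sample intakes.

```python
def icc_2_1(pairs):
    """ICC(2,1) for a list of per-participant measurement tuples.

    Each tuple holds one value per method, e.g. (app_value, recall_value).
    """
    n = len(pairs)       # participants
    k = len(pairs[0])    # methods being compared
    grand = sum(sum(row) for row in pairs) / (n * k)
    row_means = [sum(row) / k for row in pairs]
    col_means = [sum(row[j] for row in pairs) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in pairs for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical energy intakes (kcal): (app record, 24-hour recall).
intakes = [(1450, 1500), (1700, 1800), (2150, 2100), (2300, 2400), (1900, 2000)]
print(f"ICC(2,1) = {icc_2_1(intakes):.3f}")
```

Because this form measures absolute agreement, a constant offset between the app and the recall lowers the coefficient even when the two methods rank participants identically.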

Another app, NutriDiary, was evaluated for usability rather than validity. Its evaluation study reported a median System Usability Scale (SUS) score of 75, which indicates good usability, and found that most participants preferred it over traditional paper-based methods [25]. This highlights the importance of user acceptance in ensuring the fidelity of collected data.

The Scientist's Toolkit: Research Reagent Solutions

The table below details key technological components and their functions for implementing and validating data capture modalities in dietary research.

| Tool or Technology | Primary Function | Example Use in Dietary Research |

|---|---|---|

| Barcode Scanning SDK | Software library that enables barcode scanning via device cameras. | Integrating packaged food identification into a custom research app. Examples: Dynamsoft, Scandit [38] [36]. |

| Full-Text Search Engine | Database technology for efficient natural language text querying. | Powering the food database search functionality within a diet tracking application [34]. |

| Pre-trained CNN Models | AI models (e.g., ResNet, Inception) pre-trained on large image datasets. | Serving as a starting point for transfer learning to build custom food recognition models [37]. |

| 24-Hour Dietary Recall | A structured interview to capture previous day's dietary intake. | Used as a reference standard to validate the accuracy of a new mobile diet tracking app [39]. |

| System Usability Scale (SUS) | A standardized questionnaire for measuring perceived usability. | Quantifying the usability and user acceptance of a newly developed dietary app in a pilot study [25]. |

| Optical Character Recognition (OCR) | Software that extracts text from images. | Used in apps like NutriDiary's "NutriScan" to capture product information from packaging when a barcode is not recognized [25]. |

Text search, barcode scanning, and image recognition each offer distinct advantages and face specific challenges in the context of mobile dietary assessment. Barcode scanning is highly accurate and efficient for packaged foods, with performance metrics that can be objectively benchmarked. Image recognition holds promise for non-packaged foods and portion estimation but is complex to implement robustly. Text search remains a critical fallback and primary entry method. Research-grade validation, as seen with the NuMob-e-App, is essential to establish the scientific credibility of these digital tools. The choice of technology should be guided by the target population, the specific research questions, and a rigorous evaluation of the performance characteristics of each modality.

The validation of mobile diet-tracking apps against research-grade methods is a critical frontier in nutritional science. For researchers, clinicians, and drug development professionals, understanding the protocols that ensure data quality is paramount for integrating digital tools into evidence-based practice. Recent studies highlight a common challenge: systematic underestimation of energy intake, with one meta-analysis reporting a pooled average discrepancy of -202 kcal/day compared to alternative methods [41]. This article compares experimental data and methodologies from recent validation studies, providing a scientific framework for assessing and improving the data quality of mobile dietary assessment tools.

Quantitative Outcomes of App Validation Studies

Recent validation studies demonstrate variable performance in energy and nutrient intake assessment across different digital tools and population groups. The following table synthesizes key quantitative findings from peer-reviewed research.

Table 1: Key Outcomes from Dietary App Validation Studies

| Study & Tool | Population | Reference Method | Key Outcome Metrics | Main Findings |
|---|---|---|---|---|
| NuMob-e-App Validation [39] | 104 older adults (mean age 75.8 years) | 24-hour dietary recall | Equivalence in 20/44 variables; ICC for macronutrients: 0.677-0.951 | Good relative validity for energy, carbs, protein; general tendency for underestimation |
| Libro App Validity Study [41] | 47 young people vulnerable to eating disorders | Self-administered 24h recall (Intake24) | Mean energy intake difference: -554 kcal (p<0.001); ICC: 0.85 | Good test-retest reliability but significant underreporting of energy |
| Interactive Voice Response (IVR) [42] | 156 women in rural Uganda | Weighed Food Record (WFR) | MDD-W: 21.6% (IVR) vs 15.5% (WFR); kappa=0.52 | Moderate agreement for dietary diversity indicators |
| NutriDiary Usability Evaluation [25] | 74 participants (experts and laypersons) | Pre-defined sample meal entry | Median SUS score: 75 (IQR 63-88); completion time: 35 min (IQR 19-52) | Good usability; older age predicted lower usability scores |
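The ICC values in Table 1 summarize how closely the app and the reference method agree per participant. The cited studies do not state which ICC form they used, so the sketch below assumes ICC(2,1) (two-way random effects, absolute agreement, single measures) purely for illustration. The function name and the intake values are hypothetical.

```python
def icc_absolute_agreement(app, reference):
    """ICC(2,1) for paired measurements from two methods (ASSUMED ICC form)."""
    if len(app) != len(reference) or len(app) < 2:
        raise ValueError("need two equally long lists with at least two pairs")
    pairs = list(zip(app, reference))
    n, k = len(pairs), 2  # n participants, k = 2 methods
    grand = sum(a + r for a, r in pairs) / (n * k)
    row_means = [(a + r) / k for a, r in pairs]
    col_means = [sum(app) / n, sum(reference) / n]

    # Two-way ANOVA sums of squares: participants (rows), methods (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for pair in pairs for x in pair)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical energy intakes in kcal/day: the app reads exactly 100 kcal low.
recall_kcal = [1800, 2000, 2200, 2400]
app_kcal = [r - 100 for r in recall_kcal]
icc = icc_absolute_agreement(app_kcal, recall_kcal)
print(round(icc, 3))
```

Because this form penalizes systematic offsets, a constant underestimation lowers the coefficient even when the two methods rank participants identically, which is why ICC is usually read alongside Bland-Altman analysis.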

Experimental Protocols for Training and Data Collection

Protocol 1: Co-Design and Customization for Vulnerable Populations

The validation study for the Libro app employed a meticulous protocol designed for young people vulnerable to eating disorders, emphasizing psychological safety and data accuracy [41].

Participant Recruitment and Consultation:

- Participants were recruited online through a mental health research charity to ensure a relevant population sample.

- A youth consultation group (n=3, aged 23-26) with prior experience of food record (FR) apps, including two members with a history of eating disorders, provided input on program design.

- Consultations were conducted remotely via Microsoft Teams without camera or voice recordings to minimize participant burden and maintain privacy.

Program Customization Based on Feedback:

- Instructions were provided in both written and video formats to accommodate different learning preferences.

- Notifications were set at 4-5 per day with customizable timing to prompt entries without being intrusive.

- Prompts were neutrally phrased (e.g., addressing commonly forgotten ingredients like sugar and sauces) to minimize psychological triggers.

- Trackers and potentially triggering metrics were disabled during the study period.

- Virtual support options were integrated to improve user comfort and data quality.

Validation Study Design:

- A cross-over design was implemented where participants recorded intake over 3 non-consecutive weekdays and 1 weekend day with both Libro and Intake24.

- The primary outcome was concordance of total energy intake between methods, with secondary outcomes focusing on specific nutrients.

Protocol 2: Usability-Focused Training for Older Adults

The NuMob-e-App validation study implemented specialized protocols for adults aged 70 and above, addressing unique challenges in this demographic [39].

Structured Training and Evaluation:

- 104 independently living adults (mean age 75.8±4.1 years; 58% female) participated in the validation.

- Participants recorded dietary intake on three consecutive days using the app while parallel structured 24-hour dietary recalls were conducted via telephone.

- Data collection focused on nutritional intake for energy, macronutrients, and food groups defined by the German Nutrition Society.

Statistical Analysis for Validity:

- Data were analyzed for equivalence using Two One-Sided Tests (TOST).

- Agreement was assessed using Intraclass Correlation Coefficients (ICC).

- Systematic differences were identified using Bland-Altman plots.

- The analysis specifically accounted for the tendency toward underestimation observed in most variables.
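The Bland-Altman and TOST steps listed above can be sketched with the Python standard library. This is an illustrative approximation, not the study's analysis code: the equivalence margin of 200 kcal, the intake values, and the function names are hypothetical, and the TOST step uses a normal quantile in place of the t quantile, which is only defensible for larger samples than the toy data shown here.

```python
from statistics import NormalDist, mean, stdev


def bland_altman(app, reference):
    """Return the mean bias and 95% limits of agreement for paired measurements."""
    diffs = [a - r for a, r in zip(app, reference)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the paired differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def tost_equivalent(app, reference, margin, alpha=0.05):
    """Two one-sided tests, expressed through the confidence-interval rule.

    Equivalence is concluded when the (1 - 2 * alpha) confidence interval of
    the mean paired difference lies entirely inside [-margin, +margin].
    ASSUMPTION: normal approximation instead of the t distribution.
    """
    diffs = [a - r for a, r in zip(app, reference)]
    se = stdev(diffs) / len(diffs) ** 0.5
    z = NormalDist().inv_cdf(1 - alpha)
    lower, upper = mean(diffs) - z * se, mean(diffs) + z * se
    return (lower > -margin and upper < margin), (lower, upper)


# Hypothetical energy intakes in kcal/day (illustrative only, not study data).
app_kcal = [1850, 2100, 1720, 1990, 2250, 1600, 1900, 2050]
recall_kcal = [1900, 2150, 1800, 2000, 2300, 1700, 1950, 2100]

bias, loa_low, loa_high = bland_altman(app_kcal, recall_kcal)
equivalent, ci = tost_equivalent(app_kcal, recall_kcal, margin=200)
print(bias, (loa_low, loa_high), equivalent, ci)
```

A negative bias with a confidence interval inside the margin illustrates how a method can underestimate systematically and still be judged equivalent, so the choice of margin needs to be justified in advance.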

Protocol 3: Low-Literacy Adapted IVR Training

The Interactive Voice Response (IVR) study in rural Uganda developed a novel protocol for low-literacy populations using basic mobile phones [42].

Technology Adaptation:

- Automated IVR with push-button response on basic mobile phones collected semi-quantitative list-based 24-hour dietary recalls.

- The method was specifically designed for low-literate, rural women in a sub-Saharan African context.

- 156 randomly selected women participated during the wet season, with most (74.4%) successfully completing the IVR protocol.

Validation Metrics:

- Inter-method agreement was assessed by comparing mean women's dietary diversity scores (WDDS).

- Percentage achieving minimum dietary diversity for women (MDD-W) was calculated.

- Consumption of unhealthy foods and beverages was tracked.

- Results were compared against same-day observed weighed food records (WFR), which served as the gold-standard reference.
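The dietary diversity metrics above can be illustrated with a short sketch. It relies on the standard MDD-W definition (at least 5 of 10 defined food groups consumed in the previous 24 hours) and on Cohen's kappa for inter-method agreement. The per-woman food group counts and the function names are hypothetical and are not taken from the Uganda study.

```python
def achieves_mddw(food_group_count, threshold=5):
    """MDD-W is met when at least 5 of the 10 MDD-W food groups were consumed."""
    return food_group_count >= threshold


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two paired lists of binary (True/False) classifications."""
    n = len(ratings_a)
    if n == 0 or n != len(ratings_b):
        raise ValueError("need two non-empty lists of equal length")
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    p_a = sum(ratings_a) / n  # share classified True by method A
    p_b = sum(ratings_b) / n  # share classified True by method B
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)  # agreement expected by chance
    # Note: undefined (division by zero) if both methods give one constant class.
    return (observed - expected) / (1 - expected)


# Hypothetical dietary diversity scores (number of food groups, 0-10) per woman.
ivr_wdds = [6, 4, 5, 3, 7, 4, 5, 2, 6, 3]
wfr_wdds = [5, 4, 4, 3, 6, 5, 5, 2, 4, 3]

ivr_mddw = [achieves_mddw(s) for s in ivr_wdds]
wfr_mddw = [achieves_mddw(s) for s in wfr_wdds]

mean_ivr = sum(ivr_wdds) / len(ivr_wdds)
mean_wfr = sum(wfr_wdds) / len(wfr_wdds)
kappa = cohens_kappa(ivr_mddw, wfr_mddw)
print(mean_ivr, mean_wfr, sum(ivr_mddw), sum(wfr_mddw), round(kappa, 2))
```

Kappa corrects raw percent agreement for the agreement expected by chance, which matters when an indicator such as MDD-W has low prevalence in the sample.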

Methodologies for Data Verification and Quality Control

Database Structure and Verification Systems

The NutriDiary app exemplifies a robust approach to data verification through its sophisticated database architecture and entry validation [25].

Table 2: NutriDiary Database Verification Components

| Component | Description | Quality Control Function |
|---|---|---|
| Core Nutrient Database | Adaptation of the LEBTAB database with ~19,000 generic and branded items covering 82 nutrients | Provides verified nutrient values based on the German national standard food database |
| Product Information Database | Enhanced with branded products from manufacturers and open databases | Enables barcode scanning and product matching |
| NutriScan Process | Standardized photo capture of packaging (brand name, barcode, ingredients, nutrients) | Optical character recognition (OCR) automates data extraction for new products |
| Recipe Simulation | Manual estimation of nutrient values using ingredient lists and declared contents | Dietitians match or simulate nutrients for continuous database expansion |

Automated and Manual Verification Processes:

- When participants encounter unscannable products, they are guided through a standardized NutriScan process to capture all relevant product information.

- These data are sent to a server, where optical character recognition (OCR) automates data extraction.

- Trained dietitians then match detailed nutrient data from similar products or estimate values through recipe simulation.

- This hybrid approach continuously updates and expands the underlying database while maintaining quality control.
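The hybrid workflow described above (automatic match where possible, queued human review otherwise) can be sketched as a small data structure. This is a hypothetical illustration of the control flow only. The class, method names, barcodes, and nutrient fields are invented for the example and do not reflect NutriDiary's actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class ProductDatabase:
    """Hypothetical in-memory stand-in for a product information database."""

    nutrients_by_barcode: dict = field(default_factory=dict)
    review_queue: list = field(default_factory=list)

    def log_scan(self, barcode, packaging_photos=None):
        """Return nutrient data for a known barcode, else queue the item for review."""
        record = self.nutrients_by_barcode.get(barcode)
        if record is not None:
            return record
        # Unknown product: keep the packaging photos so that OCR and a
        # dietitian can process them later.
        self.review_queue.append(
            {"barcode": barcode, "photos": packaging_photos or []}
        )
        return None

    def resolve_review(self, barcode, nutrients):
        """Store reviewed values so that later scans match automatically."""
        self.nutrients_by_barcode[barcode] = nutrients
        self.review_queue = [
            item for item in self.review_queue if item["barcode"] != barcode
        ]


# Hypothetical barcodes and nutrient values (illustrative only).
db = ProductDatabase({"4000000000017": {"energy_kcal_per_100g": 250}})
first = db.log_scan("4000000000024", ["front.jpg", "nutrients.jpg"])
queued = len(db.review_queue)
db.resolve_review("4000000000024", {"energy_kcal_per_100g": 380})
second = db.log_scan("4000000000024")
print(first, queued, second)
```

The design point is that every unmatched entry both preserves the participant's record and enlarges the database, so the share of items needing manual review should fall as a study progresses.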

Multi-Method Entry and Verification

The evolution of commercial apps demonstrates increasing sophistication in entry verification through multiple input modalities [26].

AI-Powered Entry Systems:

- Leading apps like Fitia, Cronometer, and MyFitnessPal have implemented multi-modal tracking (photo, voice, text) to reduce single-method errors.

- Food scanners estimate nutritional information from photos, though accuracy remains context-dependent.

- Voice logging enables hands-free entry for real-time recording.

- Database matching algorithms cross-verify entries against verified food databases.

Database Quality Variations:

- Apps with professionally verified databases (Fitia, Cronometer) demonstrate higher accuracy than those relying on user-generated content (MyFitnessPal) [43].

- Regular database enhancements through AI improve search relevance and reduce inaccuracies.

- The integration of localized, nutritionist-verified databases addresses regional food variations.

Visualizing Participant Training and Data Verification Workflows

The following diagram illustrates the structured workflows for training participants and verifying dietary data entries, synthesized from the analyzed validation studies.

Diagram 1: Participant Training and Data Verification Workflows

The Researcher's Toolkit: Essential Materials for Validation Studies

Table 3: Research Reagent Solutions for Dietary App Validation

| Tool/Category | Specific Examples | Research Application & Function |
|---|---|---|
| Reference Standard Methods | 24-hour Dietary Recall, Weighed Food Records, Recovery Biomarkers | Provides gold-standard comparison for validating mobile app data [39] [42] |
| Statistical Analysis Packages | SAS, R, SPSS, Stata | Performs equivalence testing (TOST), ICC agreement analysis, Bland-Altman plots [39] [44] |
| Digital Data Collection Platforms | SurveyCTO, engageSPARK, NutriDiary Researcher Website | Enables remote study management, settings configuration, and data download [25] [42] |
| Usability Assessment Tools | System Usability Scale (SUS), Evaluation Questionnaires | Quantifies user experience and identifies interface barriers [25] |
| Food Composition Databases | German National Standard Database (BLS), UK Nutrient Databank, USDA Database | Provides verified nutrient values for accuracy assessment [25] [41] |
| Quality Control Protocols | NutriScan Process, Recipe Simulation, Manual Dietitian Review | Ensures database accuracy and handles unmatched food items [25] |

The validation of mobile diet-tracking apps against research-grade methods requires meticulous attention to participant training, database verification, and appropriate statistical analysis. Current evidence indicates that while digital tools show promise for dietary assessment, systematic underestimation of energy intake remains a significant challenge [41]. The protocols detailed here provide researchers with evidence-based methodologies for ensuring data quality across diverse populations, from older adults in Germany [39] to low-literacy women in rural Uganda [42]. Future developments in AI integration and database verification hold potential for bridging the accuracy gap between consumer-grade apps and research-grade methods, enabling more reliable dietary assessment in both clinical and research settings.

Accurate dietary assessment is fundamental to nutritional epidemiology, yet traditional methods like paper-based food diaries are burdensome and prone to error [45]. The proliferation of smartphone technology presents an opportunity to transform dietary data collection in research settings. However, many commercially available diet-tracking apps are developed for consumer self-tracking and lack the rigorous validation required for scientific studies [45] [3]. This case study examines NutriDiary, a smartphone application specifically developed for collecting weighed dietary records (WDRs) in epidemiological cohorts. We evaluate its usability and acceptability, contextualizing its performance against other dietary assessment tools and detailing the experimental protocols used for its validation, thereby contributing to the broader thesis on validating mobile apps against research-grade methods.

NutriDiary: Application Description and Core Architecture

NutriDiary was conceived as a digital alternative to paper-based WDRs within German nutritional epidemiological studies [46] [45]. Its design incorporates multiple food entry pathways to enhance user compliance and data accuracy:

- Text Search and Selection: Users can search and select items from the underlying database.

- Barcode Scanning: An integrated scanner allows quick entry of packaged foods.

- Free Text Entry: For foods not found in the database, users can enter items manually [45].